How I messed up a medium-scale refactoring

Suggie is a back-end Clojure app that is responsible for maintaining eight collections of stutters. The collections live in Redis and are consumed by two front-end apps. What goes in which collections, and how, is governed by a number of business rules. For example, one kind of new stutter produces two entries in one of the collections: the first being the new stutter and the second being an old one selected by a Lucene search.

The collections and business rules were added one by one. I wasn’t vigilant enough about keeping the code clean as I added them. At a point where I had a little bit of slack, I decided to spend up to two ideal days cleaning up the code (and adding one small new feature). I failed and ended up reverting about 70% of my changes.

What have I learned (or, mostly, relearned)?

Let’s start before I started:

- It’s clear that I let the code get too messy before reacting. I should have made a smaller effort, earlier, to clean it up.

- In general, I find that switching out of the “coding register” into the “explaining register” (talking vs. typing) helps me realize I’m going into the weeds. Because we’re a two-programmer shop, with only one of us (not me) competent at the front end, and we’re under time pressure (first big release of product 3, going for series A funding), I worked on Suggie too much without discussing my changes with Colin.

- Relatedly, pairing would have helped. Unfortunately, Colin and I are of different editor religions - he’s vim, I’m emacs - and that has a surprisingly negative effect on pairing. We need to figure out how to do better.

As I did the refactoring, I’d say I had two major failures.

Execution.

I read over the code carefully and made diagrams with circles and arrows and a paragraph on the back of each one explaining what each one was. That was useful. But what I under-thought was the trajectory of the refactorings: which ones should come first, which next, so as to provide the most “You’re going askew!” information soonest. (Alternately: get the most innocuous and obvious changes out of the way first, so that they wouldn’t distract/tempt me as I was doing the more challenging ones.)

Intent.

I realized there were four design issues with this code.

- The terminology was out of date. (Bad names.)

-

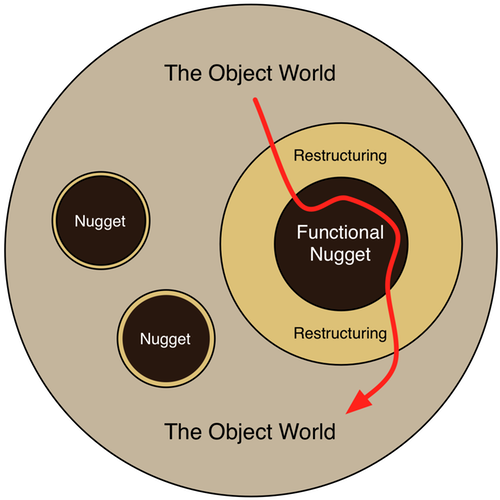

There was the oh-so-common problem that all the communication with Redis had gotten lumped into a single namespace (think “class”). The same code that put stutters into Redis hashes put ordered sequences of references-to-stutters into Redis sorted sets - and also put references-to-stutters into Redis plain sets. The code cried out to be separated into four different namespaces. Alone, that would have been a straightforward refactoring. But…

- But there was also the problem that the existing code was inefficient, in that it didn’t make good use of Redis’s pipelining. I want to be clear here: our initial move to Redis was motivated by real, measurable latency problems. And the switch to Redis was successful. But now that we were committed to Redis, I fooled myself in a particular way: “Efficiency’s good, all else being equal. We don’t know that we need pipelining here, but I see a pretty clear path toward just dropping it in during the refactoring that I’m doing anyway. So why not do it along the way?”

Why not? Because, as it turned out, I’d have gone a lot faster if I’d first solved either problem 1 or 2 and then made the changes required to add pipelining. (That’s what I’m doing now.)

- Much of our Redis code is not atomic, which needs to be fixed. I decided I’d also fix that (for this app) at the same time I did everything else. As I write, that seems so obviously stupid that maybe I should find another profession. However, I convinced myself that this new refactoring would fall easily out of the pipeline refactoring (which would fall out of the rearrangement refactoring). In retrospect, I needed to think more carefully about atomicity without assuming that I really understood how it worked in Redis. But, again, I assumed I could learn that as I went.

So I mushed up many different things: renaming, moving code to the right place, introducing more pipelining, and keeping an eye out for atomicity. My brain proved too small to keep track of them. I should have sequenced them.

In addition to all that, I noticed some other things.

- I would have done better to spend an hour a day over many days, rather than devoting full days to the refactoring. Because I have a compulsive personality, I must be forced to take time away from a problem to make me realize exactly how far down a rathole I’ve gone. (Alternately, I need a pair to rein me in.)

- I kept all the tests passing, and I kept the system working, but I made a crucial mistake. There was a function called `add-personal-X-plus-possible-Y`. (The name alone is a clue that something’s gone wrong.) It was 16 lines of `if`-madness. Instead of modifying it (while keeping the tests passing and keeping the system working), I kept the system working by not changing it. I added a new function that was intended to be a drop-in replacement for it - come the glorious future when everything worked. So there was no connection between “system working” and “tests passing” while I was doing the replacement. The new function could have been completely broken, but the system would keep working, because the new function wasn’t used anywhere outside the tests. This seems to me a rookie mistake, a variant of “throw it away and rewrite it”. But somehow I allowed myself to gradually slip into that trap.

- I suffered a bit from relative inexperience with the full panoply of immutable/functional programming styles. What I’d written was C-style imperative code. Transforming it into object-oriented code would have been straightforward, given my familiarity with various design patterns. Figuring out how to do the equivalent transformation idiomatically in Clojure, given all the constraints I’d placed on myself, took me too long. I only really figured out how to do it after I’d pulled the Eject lever.
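To make the drop-in-replacement trap above concrete, here is a minimal sketch of the alternative: keep the production entry point, and have it delegate to new code one branch at a time, so that the running system exercises each migrated piece as soon as it exists. Everything in it is a hypothetical stand-in. The names (`legacy-entries`, `personal-entries`, `entries`), the shape of a stutter map, and the branching rules are all invented for illustration, and the functions return data instead of writing to Redis so the sketch runs without a server.

```clojure
;; Hypothetical stand-in for the old if-heavy function. It returns a
;; vector of [collection entry] pairs rather than talking to Redis.
(defn legacy-entries [stutter]
  (if (:personal? stutter)
    (if (:related stutter)
      [[:personal (:id stutter)] [:personal (:related stutter)]]
      [[:personal (:id stutter)]])
    [[:general (:id stutter)]]))

;; New code for just one branch of the old function.
;; cond-> appends the second entry only when :related is present.
(defn personal-entries [stutter]
  (cond-> [[:personal (:id stutter)]]
    (:related stutter) (conj [:personal (:related stutter)])))

;; The production entry point keeps its callers. The migrated branch
;; goes through the new code immediately; the rest still uses the old.
(defn entries [stutter]
  (if (:personal? stutter)
    (personal-entries stutter)
    (legacy-entries stutter)))
```

With this arrangement, a test that compares `(entries s)` against `(legacy-entries s)` for representative stutters checks the new code against the old behavior, and a broken `personal-entries` breaks the running system too, which is the connection between “tests passing” and “system working” that I had lost.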

Here’s something that’s interesting to me. I spent many years as an independent process consultant. In my spare time, I wrote code. Because that was a part-time thing, I had a lot of leisure to put the code aside and listen to that small, still voice telling me I was going astray.

Things are different now. This real world job has only strengthened my belief in what I preached as a consultant. In particular, I believe that teams must have the discipline to go slow to get fast. And yet: I keep going too fast. These days, it’s markedly harder for me to attend to the small, still voice.

It’s an interesting problem.