Exploration Through Example

Example-driven development, Agile testing, context-driven testing, Agile programming, Ruby, and other things of interest to Brian Marick



Mon, 27 Dec 2004

Substitute "damn" every time you're inclined to write "very;" your editor will delete it and the writing will be just as it should be.

That's from Mark Twain. I read it 25-30 years ago, and I cannot type the letters v-e-r-y without recalling it. It's (wait for it...) damn useful advice.

I also remember another tip from Ralph Johnson, from back when I was his graduate student. I don't remember it as well, but it went something like this:

Don't use the word "obviously". If the rest of the sentence isn't obvious to the reader, you've just insulted him. Why risk that?

I never fail to hesitate just as I'm starting to type o-b-v...

## Posted at 11:35 in category /misc


The Powerbook G5 announcement has been canceled. Although my drive did die, I bought another disk instead of another Powerbook.

P.S. I've decided to opt for convenience over expense. Henceforth, I'm going to make bootable full backups to a Powerbook-compatible 2.5" drive in a firewire enclosure, rather than to a partitioned big disk. Next time a drive dies, I'll move the drive: Up and running again in fifteen minutes. And if my drive starts making ominous clunking sounds, I won't hang around waiting for it to die. Plus the extra planning and expense will ensure that no disk ever dies on me again.

Anyone else do that? Or do you have some other clever backup strategy? Mail me.

## Posted at 08:56 in category /mac


Wed, 15 Dec 2004

Today is the 213th anniversary of the ratification of the U.S. Constitution's Bill of Rights. That document means a lot to me.

It's also a pretty good example of how difficult it is to write an ironclad requirements document, especially in natural language. It's a bit presumptuous, I know, but a lot of the text could have benefited from my first rule for revising. And it might have helped to have an appendix with examples / tests. ("So this policeman can magically see through walls. He claims that means he doesn't need a warrant. He's wrong because the whole point of the amendment is the bit about being 'secure in their houses'.")

Oh, we're doing OK having a different group write the tests. But some sort of record of conversations around specific examples might have helped us understand how the writers intended us to think about the requirements and extrapolate from them.

## Posted at 13:20 in category /misc


Mon, 13 Dec 2004

Powerbook G5 to be announced soon

My powerbook is making truly alarming clunking noises. Gosh, could it be the disk? I'll probably be buying a new one soon, which can only mean that some wonderful new model will be announced in

## Posted at 20:45 in category /mac


As I've mentioned before, I sometimes hang out with Andrew Pickering, head of the Sociology department at Illinois. We've talked about Agile methods, which match well his analytical framework for explaining scientific progress (as put forth in his fairly dense The Mangle of Practice). As a result, he invited me to write a chapter on Agile for a forthcoming book on "the mangle."

I produced a draft in February. It was mostly a detailed retelling of a refactoring episode in manglish terms. Lots of Java. As so often happens to me, the current draft is much different. Instead of being about the micro, it's about the macro: explaining Agile development to sociologists.

So far, so boring. But I decided to end with a topic that intrigues me. Agile software development is not "businesslike". You've got a room full of programmers yammering to each other. And let's be frank: that room is messy. There's food all over the place. Maybe toys. Tables with 3x5 cards lying on them, programmers pushing them around like game pieces. Crude, childlike graphs on the wall. Since at least the '60s, business has been successfully domesticating programmers, and all that progress seems to have been lost. There's even a company where the dress code calls for ties, and the programmers on the Agile team have been given a waiver. That's the first step to the harder stuff. What now will prevent all that California-style New Age babbling about 'emergent design' from leading to web sites describing which crystals are best for writing PHP code? And office water coolers filled with "energy water"?

I exaggerate. A bit. But the style and many of the beliefs of Agile development do not mesh well with what's traditionally thought of as the proper business workplace and practices. So why is it that I run into business people who love their Agile teams? What is it that those teams are doing right?

Is it just that Agile projects deliver better ROI? I certainly hope they do, but that claim isn't proven. And I don't think it accounts for the distinct emotional response I've seen. And, in any case, those concerned with ROI are no more dispassionate utility maximizers than the average hairless ape, so there must be more to the success of Agile.

Not that it's always successful. We so often hear the sad tale of a new VP coming into an Agile shop and destroying the Agile teams, typically for reasons that seem (to us) raw prejudice. Perhaps the reasons why some teams sell themselves well can help those teams that don't.

Pages 8-10 of my paper give those reasons, so far as I understand them. But I could be wrong. And I've probably overlooked or incorrectly discounted some. So I'd truly appreciate reviews, which you can send to marick@exampler.com. I have both a PDF of the chapter and a Word version in case you want to put comments in the text. (Sorry, RTF users: Word mangles the document when it exports it to RTF.)

I'd also appreciate comments on my description of Agility. I do use manglish jargon in that description, but in a way that I hope will still be meaningful for people who haven't read Pickering. (And I've also previously posted a description of the key terms.)

(And I have a guilty feeling that I'm being unfair to Parnas and Clements in my description of their "A rational design process: how and why to fake it." If you think I am, let me know.)

Thanks.

## Posted at 12:16 in category /mangle


Tue, 07 Dec 2004

Austin Workshop on Test Automation

Bret Pettichord is co-organizing the next Austin Workshop on Test Automation on open-source web test tools, January 7-9:

Join us as we review and contribute to open-source tools for the functional testing of web-based applications. Help us understand the strengths and weaknesses of existing open-source tools and work with us to improve them, and make them easier to understand and adopt. The workshop will consist of presentations, discussions and actually sitting down and writing code, documentation, and examples.

We seek participation from developers of open-source test tools, testers with experience using them, and people who want to learn more about how they can contribute to open-source efforts.

I think it will be good, but I can't go. So you should go instead.

## Posted at 07:37 in category /testing


Mon, 06 Dec 2004

The prayer of every true partisan

From J.S. Mill, via Lionel Trilling, via John Holbo (in a discussion of one of Pragmatic Dave Thomas's alter ego's books), a "prayer of every true partisan of liberalism":

Lord, enlighten thou our enemies... sharpen their wits, give acuteness to their perceptions and consecutiveness and clearness to their reasoning powers. We are in danger from their folly, not from their wisdom: their weakness is what fills us with apprehension, not their strength.

Trilling comments:

What Mill meant, of course, was that the intellectual pressure which an opponent... could exert would force liberals to examine their position for its weaknesses and complacencies.

Isn't that a nice sentiment? And not just for political partisans.

P.S. A duty to attend to an opponent's thought is not a duty to argue with that opponent. I've in the past shrugged and walked away from arguments. Citing this post won't shame me into resuming them.

## Posted at 11:35 in category


Thu, 18 Nov 2004

Want to hire a Customer or VP of engineering?

One of the depressing things about Agile is the frequency with which great teams and great projects are derailed by the arrival of new management with no sympathy for those weirdo processes. That's happened to a client of mine. Two of the victims have asked me to be alert for possible jobs in the San Francisco Bay Area. One is a manager of managers, and one is a product manager. The people who'd hire them are outside my network, but perhaps they're not outside yours.

One, Mr. A--, was the Customer on a near-XP team. (His formal title was Senior Technical Product Manager.) Over the time I consulted with the company, he blossomed into the kind of Customer I'd want on my project.

The other person, Mr. B--, was the VP of Engineering responsible for migrating five projects from torpid to agile processes. He was a good person to consult for (and he obviously has the taste to hire well...) I heard far fewer gripes about him than I'm used to, so I suspect he was also a good manager for employees.

If you need either kind of person, drop me a line, and I'll forward a resume.

## Posted at 18:30 in category /misc


Wed, 17 Nov 2004

How people add basements to houses

In response to my note about adding a basement to a house, Lisa Crispin writes:

On the basement analogy, I would just like to point out that my neighbor dug his basement out by hand some years after he bought the house. This was back in the 40s when there weren't really many power tools to help with this. He had a conveyor belt, buckets and a shovel. He paid a laborer with a wheelbarrow to take the dirt a couple blocks down to a gulch and dump it there (of course, you can't do this these days in the city either, but y'know, simplest thing...). He ended up with a nice finished basement.

This is very common in our neighborhood. Most houses were built in the 20s and 30s with only crawl spaces, and dug out later (I guess it's amazing that the gulch is still a gulch!). Our own basement has been dug out TWICE, who knows when but certainly more than 40 years ago (the second dig was to fit in a big coal furnace), and is extra deep.

So maybe the idea of building the basement first and then the house was a later innovation! ;->

Interesting. Before heavy equipment, the cost differential between basement before and basement after was probably much smaller. Just as technology can make house construction from the bottom up more compelling than it once was, technology (refactoring tools, lots of spare cycles for continuous builds, pair programming) can make software design from the top down less compelling.

## Posted at 12:23 in category /agile


Tue, 16 Nov 2004

If a sentence is unclear, do not fix it by adding more words. Fix it by splitting it into two sentences. Then maybe add a third.

If a paragraph is unclear, do not fix it by adding more sentences. First look earlier in the piece. Can you find a place to add a few sentences that will make the later idea clearer? Perhaps you can rule out an interpretation that will later cause confusion. Write text to head off the problem, then return to adjust the guilty paragraph.

If an idea or procedure is complicated, don't add more words explaining it. Add an example. If the example is too complicated, don't add more words explaining it. Precede it with a simpler example, then change the explanation of the complicated example to focus on what it adds to the simpler one.

If you use change tracking, turn display of changes off. You won't be able to make the new text read well if it's all mixed up with the old text.

After you change a sentence, leave it aside for a while, then come back and reread at least the whole paragraph that contains it. Then tweak the sentence to make it fit better into its environment.

How do you find what needs revision?

Can you turn that bullet list into one or more paragraphs? Bullet lists are, on average, easier for writers but harder for readers. They're easier for writers because you don't have to worry about transitions between one idea and the next. They're harder for readers because there are no transitions guiding them from one idea to the next. Will their eyes glaze over because you're not providing them with a sense of flow?

Read your text aloud. You don't have to write like you speak, but reading aloud changes your perspective. Awkwardness will jump out at you.

Reading aloud is one way to get some distance, to separate the piece from your memory of writing it. Putting it aside for a day or, better, a week does the same thing. I find that reading a printed copy helps me see things I don't see on a screen. Can you find other tricks? Richard P. Gabriel tells the story of one writer who would tape his work to a wall, go to the other side of the room, and read it through binoculars.

Print the piece with a wide margin on one side. Next to each paragraph, scribble a few words about the paragraph's topic. Now read the scribbles. Do they form a progression of thought, a developing story of explanation? Or are they more like a bunch of thoughts hitched together in any old order? If so, shuffle them into a better order. (Some people cut the paragraphs out and move them around; I usually draw arrows from where the paragraph is to where it should go. I suspect the other people do better.)

Sometimes you read a piece where a particular secondary idea or clever chunk of text seems to have undue importance. It's almost as if the piece were distorted to find a way to make that gem fit. That's usually because it was. The gem came first, the piece grew away from it, but the author forced it to stay. Ask what your favorite bit of the piece is, then throw it out - or at least consider how the piece would read if you dropped it. I find this useful to do when I get bogged down during writing.

(Inspired by about twenty years of writing badly, about ten of writing competently, and five years of getting paid to edit. Not inspired by any particular author.)

## Posted at 15:58 in category /misc


Mon, 15 Nov 2004

Electronic voting machines: action can be taken

Here are some comments by Cem Kaner, professor of computer science and attorney, on the move toward IEEE approval of voting machine standards that do not include a paper trail. He is a member of the standards committee and is not happy. Comments are excerpted from a semi-public mailing list, with permission.

To set the stage, here's an excerpt from a note of Cem's:

... What puzzles me is why the IEEE is willing to associate itself with the development of a standard that pretends that non-recountable voting equipment is a reasonable, acceptable product.

Which led to this response:

Maybe the standard is being driven by the parties with a vested interest. My experience with IEEE standards is that most are driven by a small handful of people and are therefore easy to "drive" in certain directions. Something for those of us that vote on such standards to keep in mind when voting on this.

... and to Cem's longer reply, which includes some activities that we who care can take:

## Posted at 17:58 in category /misc


Who came up with the hurricane metaphor?

Someone came up with the idea of using hurricane prediction tracks as metaphors for Agile project planning. Who was it? I want to give credit where due.

UPDATE: It seems likely I heard it from Tim Lister at ADC 2004.

UPDATE2: Clarke Ching knows more. He first saw it in Frank Patrick's blog in September 2003. Frank got it from James Vornov, who got the picture from Dave Rogers. Thanks, Clarke.

## Posted at 09:59 in category /agile


Sat, 13 Nov 2004

Different disciplines have different cultures. There can be culture clash. How do you deal with that in an Agile project? A group of us addressed this question at a Scrum Master get-together. (We were Christian Sepulveda, Jon Spence, Michele Sliger, Charlie Poole, and me.) We focused on three more specific problems:

We recommend the following at the beginning of the project:

Throughout the project:

Clearly, we've only scratched the surface. In particular, I notice that we haven't got anything specific to the problem of people afraid of having to relinquish their disciplinary identities. That's a problem near to my heart, because it's one that comes up a lot with testers.

## Posted at 19:17 in category /agile


Summary of the cost of change curve

There was a lot of email discussion about my post on the cost of change curve. A restatement of the problem:

Assume a classic waterfall process. On March 15, you release version 1 of your product. On March 20, you start work on version 2. On April 20, an urgent change request comes in. Assume two choices:

Let's assume that certain of the work is the same in either case. You have to scour version 1's requirements documents, architectural design documents, design documents, and code for the implications of the change. You have to update each of them. (Remember, we are assuming the kind of project to which the cost-of-change curve applies.) You have to make the change and test it. So why would version 1 have a substantially higher expected cost? Here's what people came up with:

The agile methods are, in large part, about driving these costs down, it seems to me (and to others).

Thanks to Alex Aizikovsky, Laurent Bossavit, Todd Bradley, Clarke Ching, Jeffrey Fredrick, Chris Hulan, Chris McMahon, Glenn Nitschke, Alan Page, Andy Schneider, Shawn Smith, Glenn Vanderburg, Robert Watkins, and perhaps others I forgot to record.

Because of the underwhelming response to my quirky network invitations thing, I'd concluded the 120,000 hits my blog got last month were mostly due to two out-of-control news aggregators hitting my site once per minute.

## Posted at 12:20 in category /misc


Wed, 10 Nov 2004

In principle, the product owner of an agile project could, at any moment, throw out all the backlog of stories and take the product in a completely new direction. For the book chapter I'm writing, I'd like to give a couple of examples of radical change. What's the biggest change to the backlog that you've seen?

Obfuscate all details. I just would like to be able to say, "One correspondent told of 50% of the stories changing one rainy afternoon" or "... told how, in 2000, their consumer e-commerce site was redirected to become an air traffic control system."

Mail me. Thanks.

## Posted at 10:59 in category /agile


Tue, 09 Nov 2004

Adding a basement to the house

Agile methods people claim changed requirements late in a project are not a disaster. Skeptics claim that's impossible, that it's like finishing the first story of a house and then deciding you want a basement.

That's a misguided analogy. The reason putting in a basement after the walls are up is hard is that almost no one does it. If it were done to every house during construction, you may be sure that homebuilders would have learned to do it as cheaply as is physically possible.

Agile projects don't think ahead: in iteration N, they don't pay much attention to what's coming in iteration N+1, much less iteration N+5. That means that every iteration brings with it a whole slew of what are, in effect, changed requirements. That trains both the software and the team to handle change as cheaply as is softwarically possible.

It's like the way that just-in-time inventory management forces factories to improve their production process. Because they cannot buffer asynchronies with stock on hand, they are forced to remove them. (See The Machine That Changed the World.)

## Posted at 23:53 in category /agile


## Posted at 09:18 in category /misc


Mon, 08 Nov 2004

Tim Van Tongeren has compiled an interesting list of voting machine bugs reported in the recent election.

## Posted at 09:49 in category /misc


Sat, 06 Nov 2004

That ol' "n degrees of separation" thing

When you're asking people to do work for you, especially unpaid work, it helps a lot if you already have a personal relationship. In staffing next year's OOPSLA essays track, I want to find committee members who are well known, interdisciplinary, and like novelty and change. I know some - never enough - people like that in software, but I know fewer outside software. So I'm going to do something quirky: diffuse invitations through a network. Here's how:

Thanks. Let's see what happens...

## Posted at 14:37 in category /oopsla


Mon, 01 Nov 2004

The program chair for OOPSLA 2005, Richard P. Gabriel, wants to shake things up. As part of that he's going to institute an Essays track, and I will be program chair for that track. I'm hunting for people to serve on the committee.

The essays don't have to be original research, the usual OOPSLA fare. Instead, they'll be of two types.

To that end, I'd like to get committee members from both inside and outside the field. When they come from inside, I'd like them to have serious knowledge of some outside field. I welcome suggestions.

## Posted at 09:38 in category /oopsla


As an editor for Better Software magazine, I sometimes give authors the old fiction-writers' advice "show, don't tell". The writer Robert J. Sawyer has written a nice, short essay on it (though I think the first example overdoes it). I particularly like this essay because it itself shows how the maxim applies to nonfiction writing. Sawyer begins with an introduction to the idea, sketching out the rule. Then he shows a series of negative and positive examples, presenting both and then offering commentary. He shows, then tells. P.S. As always, I need writers for some department articles. They are:

The official timing for the next open slot has a first draft due November 15, but I have some slack to slip that.

## Posted at 08:50 in category /misc


Fri, 29 Oct 2004

(Or, "Just Another Boring Romantic, That's Me")

In one day at OOPSLA, I saw three keynote-ish talks.

The first was by the head of Microsoft Research. I'm sure he's a good fellow - most everyone I've met from Microsoft is - but it was just like a talk from every other high profile Microsoft presenter I've seen, down to that odd Gatesian way they have of gazing raptly at the person they bring on stage to demo something or other. It struck me as mostly a litany of More: more storage, more bandwidth, more cameras attached to more bodies, more visual editors to handle more complexity, more RFID chips in more places. More, more, more. I found it profoundly depressing, the more so for the answer when someone asked about privacy: "That's a hard problem" (repeated an uncomfortable number of times). No doubt so, but perhaps researchers ought to tackle such hard problems. I do not anticipate more privacy.

Ward Cunningham gave the best talk I've heard him give - a set of stories about the many Big Things that he's helped create: CRC cards, design patterns, wiki, XP. There were threads running through all the stories. Active waiting for flashes of insight. Building on luck. Simplicity. Communication. Courage. Attention to the physical world and the emotional world. Active awareness of others.

The Microsoft Research talk was mostly about piling things on top of things. Ward's was a story of things supporting people who change things that change people that.... Ward's is a story that, pound for pound, dollar for dollar, is more world-changing.

Alan Kay's Turing Award lecture was about three things: the power of a simple idea pursued relentlessly (the meme trail from Sketchpad and Simula to Smalltalk to Squeak), the as-yet-untapped potential of the computer, and our responsibility to our children to give them learning opportunities we couldn't have had.

His demos were cooler than the Microsoft ones. For a bit, that puzzled me. A searchable catalog of sky pictures and galaxies is cool: I plan to show it to my children. Being able to view most any location in the United States down to incredibly fine granularity is cool. So why is an ancient video of flickery black-and-white Sketchpad cooler? Why are Fun Manipulations of Two-Dimensional Objects in Squeak cooler? Why are not very detailed three dimensional moving objects so awesomely cool that I called my wife just to babble to her about it and say we had to teach our children Squeak?

I think it's because in the Microsoft Research world, we're observers, consumers, secondary participants in an experience someone else has constructed for us. In Alan Kay's vision, we're actors in a world that's actively out there asking us to change it. A world like that Cornel West says Ralph Waldo Emerson's was:

P.S. I feel bad saying this about the Microsoft Research guy. He can't help it that he doesn't have the vision of people like Ward and Alan Kay (or, if he does have it, can't express it). We're all just people, mostly muddling along the best we can. But Lord, I wish the genial humanists like Ward and the obsessive visionaries like Alan Kay had more influence. I worry that the adolescence of computers is almost over, and that we're settling into that stagnant adulthood where you just plod on in the world as others made it, occasionally wistfully remembering the time when you thought endless possibility was all around you.

## Posted at 13:30 in category /misc


Thu, 28 Oct 2004

Two more charts, both burnup charts instead of burndown.

One from Wayne Allen. I like it because I like area graphs more than bar charts.

Ron Jeffries has updated his very nice article on Big Visible Charts with a burnup chart like Wayne's, though pleasingly hand-drawn instead of in Excel. (I'm serious: I'd do hand-drawn if I could get away with it. For one thing, the extension of the arc could be part of an end-of-iteration ritual. And crudity of presentation reinforces the uncertainty of the prediction.)

## Posted at 09:37 in category /agile


"Methodology work is ontology work" posted

Now that I've presented my paper at OOPSLA, I can post it here (PDF). Here's the abstract:

I argue that a successful switch from one methodology to another requires a switch from one ontology to another. Large-scale adoption of a new methodology means "infecting" people with new ideas about what sorts of things there are in the (software development) world and how those things hang together. The paper ends with some suggestions to methodology creators about how to design methodologies that encourage the needed "gestalt switch".

I earlier blogged the extended abstract. This is one of my odd writings.

## Posted at 09:37 in category /ideas


Mon, 25 Oct 2004

I'm at OOPSLA. Today, I was at a workshop on the Customer role in Agile projects. A group of us tried to write down problems and solutions we've seen customers having and using. I like the results. Here they are.

Note: I fancied up the problems and solutions with a running narrative. Of the rest of the group, only Jennitta's seen even a fraction of what you see. So what I say may not be an accurate record of what someone meant. But I have deadlines to meet (and miles to go before I sleep), so this is going to go into hardcopy without their review. We may fix it up later.

Nickieben Bourbaki Is a Customer on an Agile Project.


| Something

about the problem |

Something

about solutions |

| Nickieben was originally

consumed with fear. The project

seemed far too much work to complete in the time allowed, and he would be responsible when it

failed. |

Time

was the solution. As iterations delivered visible business

value, he showed some of it to his Lords and Masters. They were pleased

with the progress, so he grew calmer. As the business environment

shifted, they changed the product direction, and Nickieben and the team

showed they chould change with it, which further pleased the L&M's.

It would have helped Nickieben a lot if he had had a support group of other Customers who could tell him what being a Customer was like, but he didn't. |

| Planning

meetings lasted way too long. Many people were uninvolved for

big chunks, and the meetings seemed to drain the energy out of the

whole team. And, for all that, the resulting estimates were not very good. |

Nickieben started having "preplanning" meetings the iteration

before. In them, he, a tester, and a programmer would discuss a story,

write some test sketches, and make an initial estimate. People came to

the planning meeting prepared for a short, focused discussion that

informed the rest of the team and asked them to look for errors in the

estimate. Nickieben's since discovered that other teams also do preplanning. The meetings vary in form, membership, the thoroughess of the discussion, etc. For example, one team had some analysts who spent the iteration ahead of the programmers predigesting the requirements, learning how to explain the domain (which was very complex), and writing tests. Nickieben doesn't think there's one right way to do it, but he does now have a motto: "Meetings must be snappy". |

| Early on, it seemed that the programmers focused much more on the

technical tasks that made up a story than they did on the story

itself. It seemed that the tasks were therefore inflated: the

programmers did what "a complete implementation of task X" meant,

rather than just enough to make the story work. |

Nickieben kept harping on the

stories as the thing that mattered to him, not tasks. He learned to

pare stories down into small "slices"

that stretched from the GUI, through the business logic, down to the

database. As the programmers got used to making one slice at a time

work, they learned that they didn't have to write lots of

infrastructure up front. |

| At first, Nickieben was indecisive about prioritizing stories.

He couldn't decide among the different stories that might go into the

next iteration. |

Short

iterations helped after one of the programmers pointed out that

any scheduling mistake he made could be corrected in less than two

weeks. So the cost of getting something wrong wasn't too big. He forced himself to prioritize by writing down the cash benefit of each feature. Now he didn't have to decide which of two features was worth more; instead, he independently decided on worth, then used the cash benefit to pick. He'd started out using a spreadsheet to track the backlog of stories, only writing them on cards when he'd decided on what should go in the iteration. Later, he switched to writing everything on cards. When it came time to thinking about planning, he'd spread the cards out on a table and push them around. Important cards went "up" (farthest from him), and the lesser cards went down. He clumped related cards together, and sometimes a batch of cards made a theme for the iteration. He also found that he could sequence cards so that an iteration's set of stories all supported a particular business process. |

| Early in the project, Nickieben often found himself frustrated that "finished" stories weren't what he thought he was going to get. It was hard to think of everything he needed to tell the programmers; so much of what he did automatically had to be remembered and put into words. And he'd explain things, and the programmers would think they understood, and he'd think they understood, but it would turn out they hadn't. | He sketched tests up front. Instead of just explaining in words, he found himself writing more and more concrete examples on the whiteboard. Discussing those seemed to prompt him to remember steps or issues he'd otherwise forget. During the iteration, he also spent more time checking in with the programmers, instead of waiting for them to come to him with questions. He especially spent more time with the "GUI guy", talking about what he wanted the GUI to do, and how it did it, and sketching out examples of usage as tests. |

| As he moved toward more examples, Nickieben started making the examples too complicated. He produced one example that illustrated all the inherent complexities of its story's bit of the business. | He learned to start with the simplest possible example. Then he added one scenario or business rule at a time. In a way, he used the examples to progressively teach the programmers, and they used them to progressively teach the code. |

| He was sometimes surprised by the technical implications of his ideas. Once, a simple "let's put a Cancel button on the progress bar" led to all sorts of scary talk about transactions and undoing. He was uncomfortable not knowing whether something would be simple or hard. | For a time, he got the help of an analyst who bridged the business and technical worlds. That person helped him understand how big a decision was. But more: her technical knowledge and experience with similar applications allowed her to suggest considerations he would never have thought of. He also enlisted the programmers for lightweight training. He had short conversations about what they had to do to implement a story. (Some of the programmers were much better at this explaining than others.) Over time, those short conversations added up to a decent enough high-level understanding of the system. The programmers also got better at coping with change. As they worked more with the system, it got more pliable, so the "internal bigness" of the change more often - but not always! - corresponded to its "external bigness." Programmers also learned more about the business domain, so they could say, "Are you going to need X, Y, or Z? Cause if you do, it would probably be better to schedule those things early." Eventually, the team didn't need the analyst any more. All of them were analysts, a little. |

| There was a time when Nickieben felt cleanup was taking over control of the project. Parts of the system were old legacy code. When he started giving stories for that, it seemed like every story led to some technical task that was more than an iteration long. Everything seemed to lead to a huge refactoring. | Nickieben learned how to write stories in small slices, about one day's work or so each. And the programmers learned how to do the big refactoring one slice at a time, such that each story led to somewhat better code and enough stories would lead to really good code. They also made information radiators to track "technical debt". Sometimes the programmers couldn't see a way to make an improvement in the time they had - even with their greater experience, it seemed like the refactoring had to be a big chunk. Whenever a programmer left the code worse than she thought she should, she wrote it up on a card and put it on the Refactoring Board. At some point, Nickieben would start getting nervous that the messiness would start slowing the team down, so he would sanction some specific cleanup time. Nevertheless, they tried to tie each refactoring to something useful, like a small feature or a bug fix. The programmers' editor also let them visually track the number of "todo" items they'd left in the code, which was another stimulus to clean up. |

| In the end, Nickieben's project was a big success. The date did slip a bit, and the Lords and Masters didn't get everything they'd wanted from the release. But they'd changed business direction right in the middle, and the team had coped well and still produced a solid, salable product. Looking back, Nickieben is amazed at the difference between him then and him now. He'd started out floundering, practically on the edge of a nervous breakdown. While he still wouldn't call his job easy, he knows he can do it. The only problem is that he knows there are people just like he was nine months ago. And just like he had no support group, they still don't. So they get to learn it all again, the painful way. | Maybe this page will help. |

| One last thing: Nickieben has to serve multiple masters: there are different interest groups who care about what the product does. There are two different classes of users, one very demanding buyer, operations, customer support, and so on. He has a lawyer friend who says it's common knowledge among lawyers that someone trying to represent multiple interest groups usually gets trapped by one or two and under-represents the others. Nickieben worries that he's doing that. | He isn't really sure what to do about it. He thinks that linking each interest group to a persona (as used in some styles of user-centered design) might help. He imagines putting big pictures of the personas up in the bullpen would keep them in his (and everyone's) mind. But he wishes he had better ideas. |
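
Nickieben's cash-benefit trick from the table above is simple enough to sketch in a few lines of Python. This is only my guess at the mechanics, not a record of what he did: the story titles, the dollar figures, the day estimates, and the idea of a fixed iteration capacity are all made up for illustration. The one thing taken from the story is the order of operations: put a cash benefit on each story independently, then let the numbers do the picking.

```python
from dataclasses import dataclass


@dataclass
class Story:
    title: str
    cash_benefit: int  # product owner's own estimate in dollars (hypothetical)
    days: int          # programmers' estimate in days (hypothetical)


def pick_for_iteration(backlog, capacity_days):
    """Greedily pick the highest-benefit stories that still fit.

    The capacity limit is an assumption added for this sketch; the
    original account only says the cash benefit was used to pick.
    """
    chosen = []
    remaining = capacity_days
    # Worth was decided story by story; sorting replaces pairwise agonizing.
    for story in sorted(backlog, key=lambda s: s.cash_benefit, reverse=True):
        if story.days <= remaining:
            chosen.append(story)
            remaining -= story.days
    return chosen


# Invented backlog, purely for illustration.
backlog = [
    Story("Export invoices", 40_000, 4),
    Story("Cancel button on progress bar", 5_000, 6),
    Story("Bulk customer import", 25_000, 5),
    Story("Audit log", 15_000, 3),
]

for story in pick_for_iteration(backlog, capacity_days=10):
    print(f"{story.title}: ${story.cash_benefit:,}")
```

A greedy pick like this is crude (it ignores themes and sequencing, which is what pushing cards around a table is good for), but with short iterations any mistake it makes can be corrected in the next one.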

## Posted at 17:38 in category /agile

Kelly Weyrauch has posted another variant of a burndown chart. Here's

my version of it.

What I like about Kelly's chart is that it marches steadily down to a release date that stays on the X axis. But it's easy to see whether work's added or removed by looking at how the bars change height.

My chart differs from Kelly's in that I removed some extrapolated lines he uses. I like to get away with as few predictions as possible. To emphasize that, I hand-drew the one prediction. That makes the line seem less authoritative and believable than one Excel draws, which is appropriate.

(One of my proudest moments back when I had a real job was in 1985 or so, when I was first charged with scheduling a project. I had to predict out about nine months, using an early version of Microsoft Project. In response, I invented Schedu-Sane®. It was a sheet of plexiglas you would lay over a printed schedule. The left side would be clear. But, as your eye travelled further to the right, forward in time, the plexiglas would become cloudy and warped, making it hard to see the predictions underneath - as is appropriate. Schedu-Sane was never constructed, but I told managers about it whenever I showed my schedule, thus reinforcing my reputation for eccentricity.)

I don't know whether I like Mike Cohn's or Kelly's chart better. I certainly don't have the experience to speak with authority about burndown charts.

## Posted at 09:26 in category /agile

What? - Gut? - So what? - Now what?

Esther Derby ran a BoF on retrospectives. She's written up her notes.

One of the things she talked about was a model of communication that she uses. It's summarized by the four questions in this posting's title. At a recent meeting, I compared something I'd just heard to those four questions and was inspired to do something I haven't done in many years: get so uncomfortable speaking to a group that I had trouble finishing.

Believe it or not, that's a recommendation. I have a fear of spending so much time in my comfort zone that I turn into one of those dinosaur consultants I scorned when I was young and feisty. (Still do, actually.) I get uncomfortable whenever I notice I've been comfortable for a while.

## Posted at 09:00 in category /links

Mike Cohn has a variant on the burndown chart that I like.

## Posted at 20:11 in category /agile

Ed Felten has two posts on Diebold voting machine protocols (here and here). Unless there's a misunderstanding somewhere down the line, well... I think that even I could have done a better job, and I'd sure never hire me to do security design.

## Posted at 14:40 in category /misc

I'm in Boulder, Colorado, at a Scrum Masters meeting. Yesterday, I was in a session on how to deal with problems around cross-functional teams (such as teams with programmers, testers, technical writers, and interaction designers). It didn't go so well, largely because of my inept moderation. But I have synthesized some of our ideas about the dynamics of successful cross-functional teams into this abstract diagram:

Here's the way it works. You have people from various disciplines who you need to work together toward a common goal. In many ways, disciplines are cultures: they have shared values, goals, languages, self-images, and such. Cultures are resistant to change. So melding these people into a team can be hard.

It's first important to provide the team with a shared goal, which is to provide external value. People should always be able to articulate how their current task ties into the delivery of specific value to those paying for the project. I suspect that a properly running team will develop a shared language that they are all comfortable using when talking about their goal. (This would be one of Galison's creoles.)

But I don't think the shared goal and shared language are enough. I think they will also develop tools (in the broad sense) that are used across disciplines. I think of these as boundary objects in Star and Griesemer's sense: things that people can use to further a common purpose while not having to agree on their meaning. Test-first customer tests are a good example: a customer might think of them mainly as an explanatory device, a tester might think of them as a tool that covers the whole breadth of a problem and lets no important detail go undiscussed, and a programmer might think of them mainly as a way to break programming down into small chunks.

Such "unshared tools" work if they allow the different people who use them to achieve what they value. Until the glorious day when disciplines wither away and everyone knows enough about everything (which I do think would be a glorious day), members of a discipline must feel valued in the terms that their discipline defines. When a tester and a programmer work together, the tester must feel valued as a tester, and the programmer must feel the tester delivers value as a tester.

Where this exchange often falls down is in the recipient's feelings. The programmer might not see value coming from the tester: she might see an imposition. A team needs to have a shared ethic that each person acts to serve others - not through "tough love" or while thinking "this is for your own good, and you'll thank me when you're grown up", but in terms the recipient values.

It seems to me that this would work best in a gift economy of the sort described by Marcel Mauss, one where a person's status depends on how much she gives to others. A Scrum Master would want to show the team a way to an internal gift economy.

But perhaps the most important thing is positive feedback, feedback that reinforces desirable behavior and attitudes. That feedback comes from shared activities: two or three people sitting down to accomplish some task. People from one discipline will help people from others, thus building respect and a common language. As is typical of Agile projects, we want such tasks to be frequent and completed quickly. That maximizes the number of feelings of shared accomplishment and allows lots of room for adjustments and experiments. For help to be valued, the tasks have to be real ones, the kind that are valued outside the team (presumably because they deliver business value).

Special thanks to Christian Sepulveda, Jon Spence, Michele Sliger, and Charlie Poole, who (except for Jon) are not to blame for the odder parts of the above. The rest is Chet's fault.

## Posted at 14:30 in category /agile

I don't think I have a firm grasp of XP's metaphor. Nevertheless, I am taken with the idea of using a guiding metaphor to encourage cohesive action. So what about a guiding metaphor for testing in Agile projects?

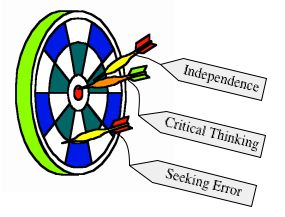

The picture on the right is my idea of conventional testing's dominant

metaphor. The tester stands as a judge of the product. She acts by

tossing darts at it. Those that stick find bugs. The revealing of

bugs might cause good things to happen: bugs fixed, buggy releases

delayed, a project manager who sleeps soundly at night because she's

confident that the product is solid.

When talking to testers, two themes that often come up are independence and critical thinking. Critical thinking, I think, means healthy skepticism married to techniques for exercising it. Independence is needed to protect the tester from being infected with group think or being unduly pressured. A fair witness must be uninvolved to get at the truth.

There's no question in my mind that human projects often need people governed by this metaphor. But what about one type: Agile projects? Some say conventional testing exists, it's understood, and Agile projects need to import that knowledge.

I agree. We need the knowledge. But maybe not the metaphor and the attitudes that go with it. Independence and - to some extent - error-seeking are not a natural fit for Agile projects, which thrive on close teamwork and trust. Is there an alternate metaphor that we can build upon? One that works with trust and close teamwork, rather than independently of them? Can we minimize the need for the independent and critical judge?

I think so. Other fields have ways of harnessing critical thinking to trust and teamwork.

Latour and Woolgar describe scientific researchers as paper-writing teams (Laboratory Life). As the paper goes through drafts, coworkers critique it. But the critiques are of a special sort. They are often couched as defenses against later attack: "Griswold is obsessive about prions. If you leave this part of the argument like it is, he's going to go after it." They often include trades of assistance: "The last JSTVR reported a new assay. You need to run that, else you'll be dated right out of the gate. I can loan you my technician."

My wife, who is a scientist, confirms this style. In her team, certain people have semi-specialties - one professor is good at statistics, so he wields SAS for other people, and I get the impression that my wife is strong at critiquing experimental design - but they all work together in defense of the growing work.

I think it's interesting and suggestive that all of these people are producers. Despite being a referee for a seemingly infinite number of journals, my wife isn't just a critic. She's also a paper writer.

Richard P. Gabriel describes writers' workshops in Writers' Workshops and the Work of Making Things. A writers' workshop is an intense, multi-day event in which a group of authors help each other by closely reading and jointly discussing each other's work. Note that, like the scientific papers, the work is not complete. The goal is to make it the best it can be before it's exposed to the gaze of outsiders. Trust is important in the workshop; there are explicit mechanisms to encourage it. For example, the group discusses what's strongest about the work before talking about what's wrong. And, again, everyone in the workshop is a producer (at least in the traditional workshops, though not necessarily as they've been imported into the software patterns community).

So, with those examples in mind, I offer this picture of an Agile

team. They - programmers, testers, business experts - are in the

business of protecting and nurturing the growing work until it's

ready to face the world. (To symbolize that, I placed a book in the

center, which is where herd animals like elephants, bison, and musk

oxen place their young when danger threatens.)

Notice that the dinosaurs are all of the same species. Even though some of them might have special skills (perhaps one of them knows SAS, or was once a tester), they are more alike than they're different. They're all involved in making the weak parts strong and the strong parts stronger. (The latter is important: it's not enough to seek error; one must also preserve and amplify success, and spread understanding of how it was achieved throughout the team.)

P.S. Malcolm Gladwell wrote an interesting discussion of the virtues of group think.

Illustrations licensed from clipart.com.

## Posted at 23:22 in category /agile

I'm visiting the SF bay area for the next two weeks. I'm free over the weekend of the 2nd if anyone wants to hang out together, talk shop, or code. Let me know. Only catch is that I'm thinking of never renting a car, partly so I blend in with the native Californians, but mostly because it seems wasteful to have my client pay for a car I'll hardly use. So you'd have to pick me up.

## Posted at 08:53 in category /misc

The second chapter of my draft book, Driving Projects With Examples, is now available. For some reason, it was tough to write. Hope it's not too tough to read. Let me know if it is, and if I've missed something in this topical summary. Here's the abstract:

We're about to launch into details. Those details will make sense if you understand the key themes of example-driven development. The Introduction was supposed to highlight those themes and give you an understanding of how a project operating according to them should feel. In this chapter, I cover them more explicitly.

## Posted at 16:14 in category /examplebook

A long time ago, I gave up teaching conventional courses. You know the type: two or more days, 25 people in a room, lecture + lab + discussion. That works OK for learning a programming language or tool, I guess, but it doesn't work for what I do. My people have to go back and apply something like programmer testing to big problems. The fraction they retain from a course doesn't help them enough. Because they have trouble getting started, they don't, so nothing changes. Money wasted. Time for the next course on some other topic (since programmer testing clearly doesn't work).

So nowadays, when people ask me for training, I give them consulting. The actual lecture is short: I prefer half a day. After that, I sit down and work closely with real people on real problems. My goal is to get a core set of opinion leaders properly started, then let them train everyone else.

I've added a new twist, inspired by some co-consulting I've done with Ron Jeffries. We visit the client together, sometimes work with people together, sometimes go our separate ways, but always get together in the evening to talk about what we've seen and what we would best do the next day. Our sum is greater than our parts.

The new twist is that I now want to co-teach my consulting-esque courses. To that end, I announce two courses:

For more details, you know where to click. I imagine we won't teach many of these, what with persuading people to pay for two instructors and our varying travel schedules, but I bet the ones we do teach will be good.

## Posted at 18:01 in category /misc

Thoughts on Andy Schneider's comments

(Andy Schneider commented on my post on cybernetics and Agile methods. I've finally gotten a spare moment to respond.)

Andy's right that agile teams are too often inward-looking. But I don't think that's a reason to avoid using the team as a unit of analysis. One way to talk about a system is to find useful components and look at their interactions. (That's not to say that the team is the only useful unit of analysis; it might well be instructive to slice things along a different dimension.)

I agree with Andy that Agile teams err when they think the only feedback that matters is instructions coming in and software going out. That's one of the reasons why I was so taken with Pickering's descriptions of devices reaching out, actively exploring their environments, and adapting to them. I think that's what Andy wants, and I'm suggesting cybernetics might have learned something we can steal.

I wasn't at all clear in my description of "teams succeeding in their own terms." By that, I meant to suggest that the team is delivering what some representative of the business said was business value, but that either the representative was wrong or someone with more power wasn't interested in that business value. So the project gets canned because it wasn't adapted to its real environment, only to an economic fairy tale: the corporation run by profit-maximizing economic actors.

## Posted at 16:59 in category /agile

Here's my position paper for the OOPSLA 2004 workshop on the Customer Role in Agile Projects. I'm a little dubious about the position I take, but what the heck. It'll lead to a better one.

Here's a summary of 3394 recent mailing list threads:

Skeptic: How can one person *possibly* do everything expected of the XP Customer!?

Ron Jeffries: The XP Customer isn't a person, it's a Role.1

By that, he means that the Customer Role can be realized by a mix of people who work things out amongst themselves and then speak with One Voice. He's right. Let me say that again: He's right. But.

But doesn't it work ever so much better when it is a person? And when it's the right person?

My contribution to the workshop will be to ask us to pretend, for a short time, that we can require that every Agile project have a bona fide business expert sitting in the bullpen with the rest of the team. That person is the focal point of the programmers' work. They are oriented toward making her smile in the same way that a compass needle is oriented north: forces may sometimes push it away, but it wants to swing back.

The objection remains: that one person can't do it alone. So the first question is: what kind of skills is she likely to lack? Three related ones come immediately to my mind:

the skill of explaining herself well.

the skill of introspection, of realizing what part of her tacit knowledge has to be made explicit. (This is the skill of knowing what she's forgotten she knows.)

the skill of creating the telling example, that being one that both helps her explain what she wants and is also readily turned into an automated test.

It would be nice to have a more complete list. It'd also be nice if we collectively had some way of teaching those skills, other than stamping "Customer" on some poor accountant's head and tossing her into a writhing mass of programmers. (We might not teach those skills to the official Customer; we might instead surround her with the right support staff.)

Given skills, the next question is: what kind of personality turns programmers, especially, into compass needles? Here are some traits I think a Customer should have: an eagerness to help that leads her to immediately turn aside from her task when a programmer has a question; a respect for others' expertise; a touch of vulnerability; curiosity; patience; enthusiasm.

Perhaps companies could use a list of such traits to choose the most effective person to be Customer, not just the person with the most domain knowledge or the person who cares most about the product (or the person who's easiest to detach from her real job).

I hope we can talk about these things. Customers - the people sitting in chairs, not the Roles - need all the help we can give them.

1 Rumor has it that F7 on his keyboard pastes that sentence into the current window.

## Posted at 10:45 in category /agile

Andy Schneider had some interesting comments on my cybernetics post. I'll comment on his comments after I get through various pressing business. The rest of these words are Andy's, reprinted with permission, except where he's quoting me.

I've worked on a bunch of broken and working agile projects and when I was reading your missive it kept taking me back to stuff I've observed.

I'm picking the bullpen as the unit of adaptation because I want to talk myself out of the notion that everything that matters is in the heads of the team members - some of it is "in" the configuration of the room, the Big Visible Charts, the source code, the Lava Lamps, the rituals that people take part in and the rules they follow and reinterpret...

When I read this I read the 'team' as the development team, the people cutting code and probably the customer representative. The definition is very dev centric (BVC, Lava Lamps, source...). When teams buy into this perspective I think they are already in trouble. These teams often have the following traits:

They are not managing the 'group think' that arises from teams that bond and look inwards rather than outwards.

They see their team as the 'agile' team surrounded by a hostile, non-agile or problem environment (reinforcing the group think and introversion).

They fail to realise that feedback loops need to be in place between all the suppliers/providers and themselves, not just in the ways drawn out in the XP book - i.e. they just don't get the feedback part.

The best agile people I see working view the team as encompassing people involved in the end to end process. Furthermore, they view agile as part of a multi-paradigm approach to the entire construction process rather than as the proverbial hammer. I do a brief and not very profound talk on scaling agile and I spend 50% of it ramming home the need to make business engagement work and the other 50% discussing how you dovetail your agile dev team into the corporate environment in a way that is acceptable to all. In my mind these are two key cornerstones to an agile project, often missed in the rush to pair programming, lava lamps and A3 charts on the walls.

You then go on to say:

...and others appalled by how often successful-in-their-own-terms Agile projects get taken down by organizational struggles and interests are trying to figure out how to convert receiving organizations into something Agile enough to really use their Adaptive Bullpens well.

The fact the Agile projects believe they need to convert other parts of the organisation suggests a few things:

they haven't figured out how to adapt their external facing image to the needs of the host.

conversion... sounds awfully religious to me (slightly tongue in cheek).

The projects that later get taken down don't deliver business value, so their going-in position, that the agile model they established would deliver business value, was probably mistaken - or at least its naive application was.

The fact that some people are even defining success 'in-their-own-terms' seems a bit of an issue with an organisation where success is probably defined in very different ways.

Of course, it can be argued that true success can only be achieved when the entire business is agile, but I think that's an oversimplification. Whilst some agile projects may be too passive, I have found many are too introverted and focussed on the 'one solution'. What we really need is not the establishment of agile development teams with a 'customer on site' but the realisation that agile is an approach, that needs to be tailored to the host environment and that must be driven by people who see the 'team' as the people in the end to end process chain, not just dev and the customer rep. Let's break down this insularity and start to see the bigger picture.

Andy S

## Posted at 14:46 in category /agile

On the Agile-Usability list, I asked for tricks:

I'd like to hear some advice to programmers, testers, and others on agile projects about how they could get a bit better at those things that the interaction design (etc.) people are really, really good at. Those things should be absorbable and try-able without a huge investment in time.

Dave Cronin (who says he loves to get mail), responded with this nice list:

Make all decisions within the context of one or more specific user archetypes (personas, actors, whatever you want to call them) accomplishing specific things (scenarios, goals, use cases, etc).

Express what the user is trying to accomplish in English. For example, if you have a complex form, first try to describe what is being specified in sentences. Then use the sequence of sentences to order fields in the layout and use the nouns and verbs from the sentences to label fields.

Focus on goals, not tasks. Goals are the end result that users want to achieve-- tasks are the things that get them there. Sometimes being overly focused on the tasks makes you lose the forest for the trees. Even if you can't do the bluesky design where you cut out a bunch of unnecessary tasks, focusing on goals will still help you express things in a way that a user will understand.

Use a grid for layout. Seems obvious, but it's amazing how often I see screens laid out with no order whatsoever. Look no further than the front page of the Wall Street Journal or any of a number of other newspapers for how to fit a ton of information of varying importance into a compact space.

Use color sparingly. A couple colors used judiciously can really make a screen come alive. Using five colors haphazardly makes your screen look like salad.

Optimize for the common case, accommodate the edge cases.

Rough out a framework before you try to lay out every button and field. Work with the big rectangles and push them around until things start to fit. Test layout with a variety of possible controls, think of the worst case situation, make sure things degrade gracefully. Then when it seems like it will work, go ahead and extend your framework by laying out all the specifics. As you all know, things change all the time. A solid framework can accommodate these changes, meaning you will rarely have to restructure your interface after you refactor.

Thanks, Dave.

## Posted at 10:41 in category /agile

I've been worrying about testing in agile projects for about three years now. I started by wondering how people like me could fit into an agile project. Then, as I saw more and more programmers and, occasionally, product owners performing testing tasks, I came to focus more on the testing role: what are its goals? what are its components? what skills comprise it? how is the role distributed amongst the team?

Since the beginning of the year, I've been wondering less about what specifically has to be done and more about how a team evolves such that those things just naturally get done - or, if they don't get done, how the team recognizes that and corrects itself.

I've been thinking that a team has to have the right traits - in a way, the right personality. Individuals, I'm thinking, act as "carriers" of those traits. In the right circumstances, a person's traits will "infect" the team. Once that happens, you won't need to worry (so much) about which person should do what or which hats (roles) people should wear when.

In this blog category, I'll start giving capsule descriptions of the traits I think people like me should infect a team with. It's not that I think tester-people uniquely possess these traits; it's just that they're characteristic of testers, so testers make great carriers.

Background reading: Bret Pettichord's "Testers and Developers Think Differently".

## Posted at 08:54 in category /traits

Cybernetics, agile projects, and active adaptation

I just read the first chapter of Andrew Pickering's forthcoming The Cybernetic Brain in Britain, a history of - and reflection on - the British cyberneticians from the 50's on.

As Pickering tells it, these people were concerned with the brain, but not as an organization of knowledge, a sack of jelly in which representations of the world are stored and processed.

What else could a brain be, other than our organ of representation? ... As Ashby put it in 1948, 'To some, the critical test of whether a machine is or is not a "brain" would be whether it can or cannot "think". But to the biologist the brain is not a thinking machine, it is an acting machine; it gets information and then it does something about it' (1948, 379). The cyberneticians, then, conceived of the brain as an immediately embodied organ, intrinsically tied into bodily performances. And beyond that, they conceptualised the brain's special role to be that of an organ of adaptation. The brain is what helps us to get along in, and come to terms with, and survive in, situations and environments we have never encountered before... the cybernetic brain was not representational but performative, as I shall say, and its role in performance was adaptation.

This gives me a couple of thoughts. First, we can think of the agile bullpen, containing people, furniture, and source code, as an embodied organ of adaptation. The team is something that gets information and does something about it (changes the code). The better the team, the more flexibly adaptable it will be, and the better it will survive in the business world.

(I'm picking the bullpen as the unit of adaptation because I want to talk myself out of the notion that everything that matters is in the heads of the team members - some of it is "in" the configuration of the room, the Big Visible Charts, the source code, the Lava Lamps, the rituals that people take part in and the rules they follow and reinterpret. Also, I'm making a bit of reference to Searle's Chinese Room critique of AI, though I'm not sure to what end.)

The second thought ties in with this quote:

Norbert Wiener's basic model for the adaptive brain, the servomechanism, is, in one sense, a passive device. A thermostat simply reacts to unpredictable fluctuations that impinge upon it from its environment. If the temperature in the room happens to go up, the thermostat turns down the heating, and vice versa. In contrast, the distinctive feature of Walter and Ashby's models is that they were active. They interrogated their environments and adapted to what they found. Walter's tortoises literally wandered through space, searching for sources of light. Ashby's homeostats stimulated their environments with electrical currents and received electrical feedback in return. Such cybernetic devices, one could say, enjoyed a relationship with their environment which was both performative - the devices acted in their world, and the world acted back - and experimental: they explored spaces of possibility via these loops of action and reaction.

Agile projects are not as passive as a thermostat. They stimulate the world by releasing software, causing the world-of-the-business to stimulate the project back. But there seems to be an emerging critique of the current stage in Agile that accuses it of being too passive. Tim Lister's keynote at the Agile Development Conference charged the listeners to do more than passively accept requirements from users: instead, we should exhibit (and develop) expertise in what users need. The new Agile-Usability list kicked off with some blasts at what can happen when a business expert who doesn't understand usability creates a UI feature by feature and the programmers passively do what she wants. Mary Poppendieck's keynote at XP/Agile Universe talked of the need to deliver a whole product to the business. And others, appalled by how often successful-in-their-own-terms Agile projects get taken down by organizational struggles and interests, are trying to figure out how to convert receiving organizations into something Agile enough to really use their Adaptive Bullpens well.
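To make the passive/active contrast from the quote concrete, here is a toy sketch in Python. It is only an illustration under my own assumptions: the function names, the setpoint, and the one-dimensional "light" landscape are invented for the example and are not taken from Pickering, Walter, or Ashby. The first function only reacts to whatever reading it is handed; the second acts on its world (it samples neighbouring positions) and adapts to the feedback.

```python
def passive_thermostat(temperature, setpoint=20.0):
    """React only to what the environment presents: heat if below the setpoint."""
    return "heat_on" if temperature < setpoint else "heat_off"


def active_search(light_at, start=0, steps=10):
    """Probe neighbouring positions and move toward the brighter one.

    `light_at` is a hypothetical function mapping a position to a brightness.
    """
    position = start
    for _ in range(steps):
        # Act on the world (sample both neighbours), then adapt to the feedback.
        best = max((position - 1, position, position + 1), key=light_at)
        if best == position:
            break  # no neighbour is brighter, so stay put
        position = best
    return position
```

The thermostat never learns anything it wasn't told; the searcher finds the light only because it goes looking. That is the distinction the critics of "passive" Agile seem to be drawing.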

In so-far-sketchy conversations with Pickering, we've both been struck by similarities between Agility (as I describe it) and the cyberneticians (as he describes them). Perhaps we of today can learn from the cyberneticians of yesteryear - not least, how to avoid their fate: because that polyglot, promiscuous field has pretty much vanished, except from fond memories. The anti-discipline that produced von Foerster's "Act always so as to increase the number of choices" is itself no longer a choice.

## Posted at 17:48 in category /agile

Norm Kerth is the author of Project Retrospectives, an early proponent of patterns, and a nice guy. He suffered a disabling brain injury in a car accident and can't work for sustained periods.

Karl Wiegers has bunches of shareware process aids (mostly Word and Excel templates for various things). Net proceeds will go to the Norm Kerth Benefit Fund.

A worthy cause. And you ought to buy Norm's book, too. It's a good and useful read, in the Weinberg style.

## Posted at 12:22 in category /misc

Read The Fine Manual (or FAQ). Jason Yip and Alan Francis and Ron Jeffries solve my problem:

From http://confluence.public.thoughtworks.org/display/CC/FrequentlyAskedQuestions:

Q: I see just a dark blue screen when I look for build results and my tomcat log has the following exception: org.apache.xml.utils.WrappedRuntimeException: The output format must have a '{http://xml.apache.org/xslt}content-handler' property!. What's going on and how do I fix it?

A: JDK1.4 includes an old version of xalan, try installing a new xalan.jar (from http://xml.apache.org/xalan-j/downloads.html) into tomcat_dir/common/endorsed.

I knew I'd be publicly shamed by asking, and this is a particularly embarrassing way, but look: I got the answer three waking hours later. Thank you, Jason, Alan, Ron, and - especially - Eric Bina.

## Posted at 06:30 in category /misc

Here I am in Omaha, fiddling with Cruise Control (2.1.6). Once I found that Mike Clark had made available the relevant chapter from his fine Pragmatic Project Automation, things became much easier.

But I still can't get Tomcat to spit out the formatted build log because of this evil exception:

org.apache.jasper.JasperException: The output format must have a '{http://xml.apache.org/xalan}content-handler' property!

I just know that I'm doing something stupid and obvious, maybe even to a newbie like me, definitely to a Control freak who groks XSLT and all the steps that lead from a simple build to a pretty web page. Here's hoping that this posting will lead to a quick mail with the simple'n'obvious fix. Send it and I'll owe you lunch.

(I know: "use the source, Luke". I don't have time just now.)

Full error page here.

## Posted at 20:50 in category /misc

I'm uncomfortable with public talk about politics, but this election is especially important.

I've long been broadly sympathetic to Democratic goals, yet often suspicious of Democratic approaches and reflexes. I've voted for candidates of both major parties. Until this year, I'd never put a political bumper sticker on my car (unless you count "Free the Mouse"), and I'd never donated a dime to any politician or party.

Now I find myself with Kerry bumper stickers on both cars and a big credit card bill for donations.

The current administration is incompetent at policy execution, especially at sweating the details, especially at keeping ideology from interfering with practical results, especially at adjusting when circumstances change.

That's my core objection, pragmatist that I am, but I can also be a stiff-necked moralist. This administration promised to bring honor and dignity back to the White House. They did not. They evade responsibility. They are willing to benefit from the dirty work of others. They resolutely not-quite-lie to create beliefs (like an involvement of Iraq in 9/11), or they withhold information it is their duty to disclose (like the true cost of the Medicare bill). They pander to the country's moral flaws of fearfulness and spite. Condemning others while taking the easy path is not honorable.

They're my employees, they've done a lousy job, and I want to fire them.

I now return to my normal topics.

## Posted at 10:44 in category /misc

After XP/Agile Universe, I stayed in Calgary for a day. Instead of going to Banff like a normal person would, I spent that day visiting two companies that had started to drive their projects with business-facing examples. Although they weren't yet doing the style of development I advocate in the book, I thought I could learn from them. I did. But I learned in a particular way. Instead of interviewing them about their practices with an eye toward filling up the book, I rapidly slipped into my normal consulting role: conversing with an eye toward offering advice and answering their questions. A couple of people worried that I wasn't getting what I came for, that they were just getting free consulting. They were, but I also got what I came for. (And who knows, a consulting gig might come of one of them.)

I'd like to do more of that. If you're interested and in one of the places listed below, contact me. The only rules are that you'll have to drive me between places, and that you be a project that's making a concerted effort to use examples/tests to understand the problem to be solved and to explain it to programmers. You don't have to consider that effort a smashing success - though some of that would be nice - you just have to be trying.

Here are the places I'll be for less than a whole week, making it easy to extend my trip:

## Posted at 08:19 in category /agile

WATIR is a project headed by Bret Pettichord and Paul Rogers. They're going to take the existing Web Testing in Ruby framework and push it forward full throttle. This will be a project to watch - and to contribute to.

## Posted at 06:44 in category /testing

Dave Thomas has a masterful blog entry titled Weeding Out Bugs. Check it out.

## Posted at 06:44 in category /coding

In my XP/Agile Universe keynote, I cited a bunch of books. Someone asked that I post them, so here they are.

One bit of evidence that test-driven design has crossed the chasm and is now an early-mainstream technique is JUnit Recipes, by J.B. Rainsberger. This is a second-generation book, one where he doesn't feel the need to sell test-driven design. He's content (mostly) to assume it.

I asked how it could be that Agile projects proceed "depth-first", story-by-relatively-disconnected-story, and still achieve what seems to be a unified, coherent whole from the perspective of both the users and programmers. My answer was the use of a whole slew of techniques.

At the very small, programmers are guided by simple rules like Don't Repeat Yourself. Ron Jeffries' Adventures in C# is a good example of following such rules. Martin Fowler's Refactoring is the reference work for ways to follow those rules.
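For readers who haven't met the rule, here is a minimal sketch of what following Don't Repeat Yourself looks like at this scale. It is written in Python for brevity, and the discount rule, the threshold, and every function name are hypothetical, made up for the illustration rather than drawn from any of the books above.

```python
# Before: the same discount rule is written out twice.
def invoice_total_before(prices):
    total = sum(prices)
    return total * 0.9 if total > 100 else total


def quote_total_before(prices):
    total = sum(prices)
    return total * 0.9 if total > 100 else total


# After: the rule lives in one place, so a change to it happens once.
def discounted(total, threshold=100, rate=0.9):
    """Apply the (hypothetical) bulk discount to totals above the threshold."""
    return total * rate if total > threshold else total


def invoice_total(prices):
    return discounted(sum(prices))


def quote_total(prices):
    return discounted(sum(prices))
```

Behavior is unchanged; what changes is that the next story touching the discount has exactly one place to go.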

At the somewhat higher level, we find certain common program structures: patterns, as described in Design Patterns. Joshua Kerievsky's new Refactoring to Patterns shows how patterns can be the targets of refactoring. You can think of patterns as "attractors" of code changes.

There are even larger-scale structures as described in Eric Evans's Domain-Driven Design, Fowler's Patterns of Enterprise Application Architecture, and Hohpe and Woolf's Enterprise Integration Patterns.

I was resolutely noncommittal about whether the larger-scale patterns can emerge from lower-level refactorings or whether pre-planning is needed. I tossed in Hunt and Thomas's The Pragmatic Programmer both because it (and they) speak to this issue and because it seems to fit somehow in the "architectural" space.

Finally, I addressed an issue that's been weighing on my mind for more than a year. Dick Gabriel has said (somewhere that I can't track down precisely) that there are two approaches to quality. The one approach is that you achieve quality by pointing out weaknesses and having them removed. The other is that you build up all parts of the work, including especially (because it's so easy to overlook) strengthening the strengths.

Testers too often take the first approach as a given. They issue bug reports, become knowledgeable discussants about the weaknesses of the product, and think they've done their job. And they're right, on a conventional project. I've decided I don't want that to be their job on an Agile project; instead, I want them to follow the second approach. I want them to apply critical thinking skills to the defense and nurturing of a growing product.